On 13 March 2024, the European Parliament approved the AI Act, the world's first comprehensive regulation on artificial intelligence, by an overwhelming majority. The AI Act is a set of rules governing the deployment and use of AI systems. It aims both to recognise the transformative potential of AI across sectors including healthcare, transportation, manufacturing and energy, and to provide the robust oversight needed to ensure the technology is used safely and ethically.

What you need to know

The AI Act introduces a risk-based approach to AI regulation, categorising AI systems based on their potential impact and level of risk. Under the Act, AI systems are classified into four main categories, each subject to specific regulatory requirements:

- Unacceptable risk: AI systems deemed a threat to individuals or societal values will be banned. This includes systems involved in cognitive behavioural manipulation, social scoring, and real-time remote biometric identification in publicly accessible spaces, subject to narrow law enforcement exceptions.

- High risk: AI systems posing significant risks to safety or fundamental rights fall into this category. Examples include AI used in critical infrastructure management, law enforcement, and legal interpretation, as well as AI systems used in products falling under the EU's product safety legislation, such as toys, aviation, cars and medical devices. High-risk AI systems will undergo rigorous assessment and must adhere to stringent regulatory standards before being put on the market.

- Generative AI: Although not classified as high risk, other AI systems, including generative AI systems such as ChatGPT, must comply with transparency requirements and EU copyright law.

- High-impact general-purpose AI: Advanced AI models with the potential for systemic impact, such as GPT-4, are subject to thorough evaluation, and any serious incidents must be reported to the European Commission.

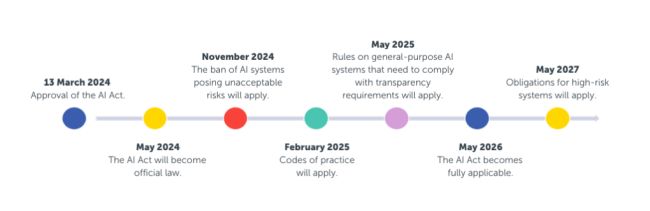

The timeline of the AI Act's adoption

The AI Act is set to come into effect in May 2024, marking a significant milestone in AI governance. Implementation will be overseen by national authorities, supported by the AI Office within the European Commission. Member states have 12 months to nominate the oversight agencies responsible for enforcing the regulations.

The approved text will undergo formal adoption by Parliament during an upcoming plenary session. The Act will become fully enforceable 24 months after its entry into force, with certain aspects taking effect earlier:

- The prohibition of AI systems posing unacceptable risks will be enforced six months after the Act's entry into force.

- Codes of practice will come into effect nine months after the AI Act's entry into force.

- Rules on general-purpose AI systems, which must adhere to transparency requirements, will be implemented 12 months after the Act's entry into force.

High-risk systems will be granted additional time to ensure compliance, with their obligations becoming enforceable 36 months after the Act's entry into force.
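For compliance planning, the staggered deadlines above can be turned into calendar dates once the entry-into-force date is known. The following is a minimal Python sketch, not a statement of the legally binding dates: the month offsets are those listed in this article, while the names `MILESTONES`, `add_months` and `milestone_dates`, and the example date, are assumptions made purely for illustration.

```python
import calendar
from datetime import date

# Offsets in months after entry into force, as listed in this article.
MILESTONES = {
    "Prohibitions on unacceptable-risk systems": 6,
    "Codes of practice": 9,
    "Rules on general-purpose AI systems": 12,
    "Act fully enforceable": 24,
    "High-risk system obligations": 36,
}


def add_months(start: date, months: int) -> date:
    """Return the date `months` calendar months after `start`.

    If the target month is shorter, the day is clamped to its last day.
    """
    total = start.month - 1 + months
    year = start.year + total // 12
    month = total % 12 + 1
    day = min(start.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def milestone_dates(entry_into_force: date) -> dict[str, date]:
    """Map each milestone to its date for a given entry-into-force date."""
    return {name: add_months(entry_into_force, m) for name, m in MILESTONES.items()}


if __name__ == "__main__":
    # Hypothetical entry-into-force date, chosen only for illustration.
    assumed_entry = date(2024, 6, 1)
    for name, when in milestone_dates(assumed_entry).items():
        print(f"{when.isoformat()}  {name}")
```

The final text of the Act may fix its application dates explicitly, so any dates computed this way should be checked against the published regulation.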

Fines

The AI Act will impose strict penalties for non-compliance. The most serious breaches, such as the use of prohibited AI practices, could incur fines of up to €35 million or 7 per cent of worldwide annual turnover, whichever is higher. Other breaches could incur fines of up to €15 million or 3 per cent of worldwide annual turnover, again whichever is higher.
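To see how the fixed amount and the turnover percentage interact, the ceiling for each tier can be computed as the higher of the two figures. The sketch below is illustrative only: it assumes the "higher of the two" rule applies to both tiers, the tier labels and the function name `fine_ceiling` are hypothetical, and the result is an upper limit rather than the fine a regulator would actually impose.

```python
# Upper limits as stated in this article:
# (fixed cap in euros, percentage of worldwide annual turnover).
# The tier labels are hypothetical names used only in this sketch.
FINE_TIERS = {
    "most_serious": (35_000_000, 7),
    "other_breaches": (15_000_000, 3),
}


def fine_ceiling(tier: str, annual_turnover_eur: int) -> int:
    """Return the maximum possible fine for a tier, in whole euros.

    Assumes the ceiling is the higher of the fixed cap and the
    turnover-based amount for both tiers.
    """
    fixed_cap, percent = FINE_TIERS[tier]
    # Integer arithmetic avoids floating-point rounding; rounds down to whole euros.
    turnover_based = annual_turnover_eur * percent // 100
    return max(fixed_cap, turnover_based)
```

For a hypothetical company with a worldwide annual turnover of €1 billion, `fine_ceiling("most_serious", 1_000_000_000)` returns 70,000,000, because 7 per cent of turnover exceeds the €35 million cap. For a turnover of €100 million, the same call returns 35,000,000, because the fixed cap is the higher figure.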

The European Commission's news article can be found here.

The European Commission's press release can be found here.

Our previous blog post on the AI Act can be found here.

The content of this article is intended to provide a general guide to the subject matter. Specialist advice should be sought about your specific circumstances.