Welcome to the November/December 2022 newsletter prepared by Arnold & Porter's AdTech and Digital Media group. This newsletter includes legislative, regulatory and case law developments relevant to the AdTech and Digital Media industries, the state of M&A activity in the AdTech and MarTech industries and updates regarding Arnold & Porter's activity in the space.

Legislative

Senate Report on Domestic Terrorism Criticizes Social Media Business Models. A report by the Senate Committee on Homeland Security and Governmental Affairs focused on the rising threat of domestic terrorism and considered the impact that social media has had on the spread of extremist content. The report considered four large social media companies and concluded: "This report also finds that social media companies have failed to meaningfully address the growing presence of extremism on their platforms. These companies' business models are based on maximizing user engagement, growth and profits, which incentivizes increasingly extreme content—and absent new incentives or regulation, extremist content will continue to proliferate and companies' moderation practices will continue to be inadequate to stop its spread."

Withdrawal of Journalism Competition and Preservation Act ("JCPA" or the "Act"). Momentum for adoption of the JCPA stalled in early December when it was left out of a bicameral agreement on defense-spending legislation. The JCPA would have allowed eligible news media outlets to collectively negotiate regarding use of their content with covered online platforms, such as Facebook. The Act, sponsored by Senator Amy Klobuchar and co-sponsored by senators from both parties, would have carved out an exception in antitrust law by giving eligible publishers and broadcasters the ability to collectively negotiate with covered online platforms regarding the pricing, terms and conditions by which the covered online platforms could access their content. Proponents of the Act argued that social media platforms like Facebook are responsible for the decline in advertising revenue of many news publications and media outlets. The Act was opposed by trade groups NetChoice and the Computer & Communications Industry Association, along with a number of other organizations, which argued that it was flawed on several grounds: it would force platforms to negotiate with, and carry the content of, digital journalism providers regardless of how extreme their content was; it would permit large media conglomerates to dominate negotiations at the expense of small media outlets; and it would favor large broadcasters over other forms of journalism.

Legal Challenge to California Age-Appropriate Design Code Act (AB 2273). As described in our September newsletter, the California Age-Appropriate Design Code Act (CAADCA) was signed into law on September 15, 2022, in order to promote online privacy protection for minors. The law's provisions generally become operative on July 1, 2024. The tech industry group NetChoice has filed a lawsuit seeking to enjoin enforcement of the Act on various grounds. According to the complaint, the Act contains a number of infirmities, such as the following:

- It violates the First Amendment by impermissibly telling sites how to manage constitutionally protected speech, and by impermissibly "deputiz[ing] online services providers to act as roving internet censors at the State's behest";

- It is void for vagueness under the First Amendment and the Due Process Clause;

- It violates the Commerce Clause because it "seeks to impose an unreasonable and undue burden on interstate commerce";

- It is preempted by the Children's Online Privacy Protection Act (COPPA) and Section 230 of the Communications Decency Act;

- It violates the Fourth Amendment by requiring businesses to provide commercially sensitive information to the Attorney General "on demand without any opportunity for precompliance review by a neutral decisionmaker"; and

- It violates various provisions of the California Constitution.

FTC Developments

Epic Games to pay $520 million in penalties for alleged COPPA violations and refunds to users for alleged unwanted user charges. On December 19, 2022, the FTC announced that it had entered into an agreement with Epic Games, the maker of the popular Fortnite video game, that included a $275 million penalty for alleged COPPA violations and an obligation to refund users $245 million for charges they were allegedly duped into incurring as a result of Epic Games' deployment of dark patterns. (See our September Advisory for a discussion of the FTC's focus on digital dark patterns.) The penalties stemmed from a federal court action in which the FTC alleged that Epic Games collected personal data of children under 13 without obtaining parental consent, and that Epic Games engaged in unfair practices through use of default text and voice communications that resulted in children and teens being exposed to bullying, threats and harassment by strangers they were matched with. The court order prohibits Epic Games from enabling voice and text communications for children without parental consent, or for teens without consent of the teens or their parents. The refund obligation stemmed from a separate administrative action, in which the FTC alleged that Epic Games used various dark patterns, such as confusing button configurations, to trick users into making unwanted in-game purchases. Prior to 2018, Epic Games also allowed children to purchase online currency without parental or card-holder consent. The FTC also alleged that Epic Games engaged in other problematic billing practices, such as blocking users' accounts when they disputed charges, which caused users to lose access to online content they had purchased.

Case Law Development

More on Gonzalez v. Google (CDA Section 230). As we described in our October newsletter, the Supreme Court has agreed to hear a case that addresses whether Section 230 of the Communications Decency Act of 1996 shields interactive computer services that use algorithms to target and recommend third-party content to users. The Chamber of Progress, a US-based trade group that represents technology companies, recently wrote a letter to Attorney General Merrick Garland arguing that the decision had important implications for access to reproductive health information online and calling on the United States Department of Justice to file a brief in support of defendants. The letter, dated November 21, 2022, states that an erosion of Section 230's protections would open the floodgates to liability for online service providers and websites that allow reproductive health information on their platforms. The Chamber of Progress writes that this would lead to a "devastating reality for women seeking reproductive resources in states where they are unavailable."

EU

EU Privacy Regulators Threaten Meta's Targeted Ad Model. In early December, a board representing EU privacy regulators determined that Meta violates Europe's basic privacy law, the GDPR, notably by processing personal user data to serve personalized ads. Users can opt out of personalized ads served based on their activity on third-party websites, but cannot opt out of ads based on their activity on Meta's own platforms. Meta does not rely on user consent for this type of personalized advertising because it claims that personalized ads are necessary for the performance of its contracts with users. The board rejected "performance of contract" as a valid basis under the GDPR to serve personalized ads and directed Ireland's Data Protection Commission to issue binding orders against Meta and impose fines. The Irish Commission will have to follow the board's decision, which has not yet been published, but appeals can still be lodged.

Other

Report Advocates Use of Expert Panels for Content Moderation. A paper published by the Stanford Institute for Human-Centered Artificial Intelligence summarized a study focused on the perceived legitimacy of social media content moderation policy and concluded that respondents believed that expert panels were the most legitimate of the four types of content moderation processes they considered. The other three types considered were paid contractors (i.e., individuals hired and trained by the social media company), automated systems (i.e., algorithms) and digital juries (i.e., ad hoc groups of users). The study used a metric called "descriptive legitimacy" (also referred to as "perceived legitimacy"), which is composed of the following five parts: "satisfaction (with how the organization handled the decision), trustworthiness (of the organization), fairness and impartiality (of the organization), commitment to continue having the organization, and belief in maintaining the scope of the organization's decision-making powers (i.e., decisional jurisdiction)." Respondents considered algorithms to be the most impartial moderation process, subject to caveats based on how the algorithm was designed and the ability for human review. However, algorithms lagged behind expert panels on overall legitimacy. The paper recommended that social media companies use expert panels as components of their content-moderation policies.

Markets

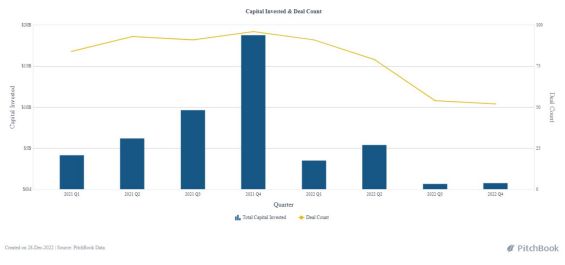

Based on data provided by PitchBook, AdTech and MarTech M&A activity involving change-of-control transactions in (a) the US (see Figure 1 below) and (b) the US, EU and Asia combined (see Figure 2 below), measured both by dollar volume and deal count, was significantly down in CY2022 compared with CY2021.

(Figure 1: U.S. Only)

(Figure 2: U.S., Europe, Asia)

Arnold & Porter Updates

- A&P Securities Enforcement & Litigation partner Christian Schultz was quoted in The National Law Journal article "Social Media Influencers' $100 Million SEC Charge Hints at Tighter Enforcement Going Forward." The article discusses the seven influencers charged with fraud after a criminal complaint was filed against them in the U.S. District Court for the Southern District of Texas for running a "pump and dump" scheme against their followers.

The content of this article is intended to provide a general guide to the subject matter. Specialist advice should be sought about your specific circumstances.