Popular AI tools: What about data protection?

Tools such as ChatGPT and Microsoft Copilot are the obvious choice for using AI in the workplace. But what about data protection compliance? We have taken a closer look at the most popular tools and summarized our findings. We also offer our internal tool for your use. This is part 2 of our AI series on the responsible use of AI in the company.

The requirements for use in your company

Anyone who wants to use AI tools in the workplace should carefully examine the contractual conditions. This involves key questions such as:

- "What am I allowed to do with the output of the tools according to the terms of use?"

- "Who has access to the information entered (input) and the newly generated content (output) and for what purpose?"

- "How is data protection guaranteed when processing personal data?"

As we show below, the answers to these questions vary widely and are not always satisfactory. In addition, a Data Processing Agreement is usually required for the processing of personal data; this is no different for online AI tools than for any other cloud service or any other provider that processes personal data.

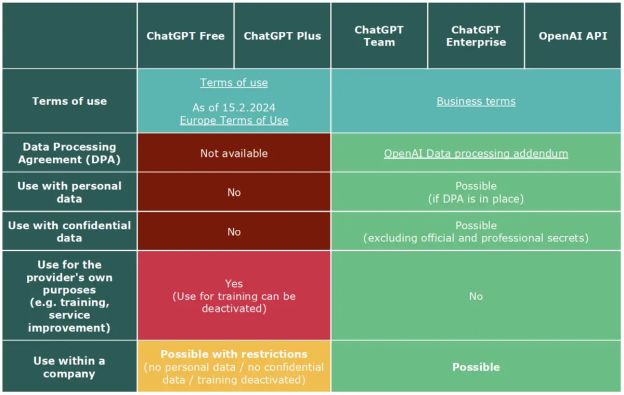

ChatGPT in the workplace

ChatGPT from OpenAI is offered in different versions, from free to paid versions for companies. There are important differences between these versions that should be noted, particularly when processing personal data and business secrets. Neither the free nor the paid version for private customers is suitable for processing personal data and other confidential data in companies. With OpenAI, neither "ChatGPT Free" nor "ChatGPT Plus" can be covered by a Data Processing Agreement ("DPA"), which companies require in such cases under the Swiss Data Protection Act (and the GDPR).

Only the versions designed for companies make it possible to comply with the legal data protection requirements in Switzerland. Only with these versions, such as "ChatGPT Enterprise" or the "OpenAI API", is it possible to conclude such a contract (DPA), which provides the legal basis for using personal data in the chat or via the API. Note also that only the terms of use of the business versions guarantee that the data entered will not be used for other purposes (i.e. OpenAI's own purposes), such as the further development or training of the service. In addition, the terms of use of the business versions contain a confidentiality obligation for OpenAI, although it is ultimately up to the company to decide whether to trust the provider with its business secrets. Unfortunately, this confidentiality obligation is not yet sufficient for Swiss official and professional secrets, such as those held by lawyers, doctors, banks or public administrations.

Since January 10, 2024, OpenAI has been offering "ChatGPT Team" for smaller companies and teams. Like ChatGPT Enterprise and the OpenAI API, ChatGPT Team is made available under the terms of use of the business versions and it is, therefore, also possible to enter into the DPA for ChatGPT Team. The content is not used for training and there is a confidentiality clause.

For Swiss customers of the business versions, the contractual partner is the Irish subsidiary OpenAI Ireland Ltd., which may be a legal advantage. The DPA is now also automatically concluded with the same party as the service contract. The EU Standard Contractual Clauses for data exports to the US (EU SCC) are still agreed directly with OpenAI, LLC, as the data processing continues to take place in the US (however, we assume that in reality the Irish subsidiary is the exporter and in turn has the EU SCC in place with its US sub-processors, which according to the list of sub-processors include its US group companies). However, OpenAI has not resolved the matter cleanly and transparently. For example, the DPA is signed neither by OpenAI, LLC nor by OpenAI Ireland Ltd., but by yet another group company, which means that it can only be established indirectly that OpenAI Ireland Ltd. is contractually bound by the DPA, as is legally required. This is a pity and could easily have been avoided.

For the ChatGPT Free and ChatGPT Plus private offers, the US company is currently still the provider of the service for users in Switzerland. However, according to the new "Europe Terms of Use" already available, this is set to change from February 15, 2024, meaning that the Irish subsidiary will be responsible for users in Switzerland and the EEA.


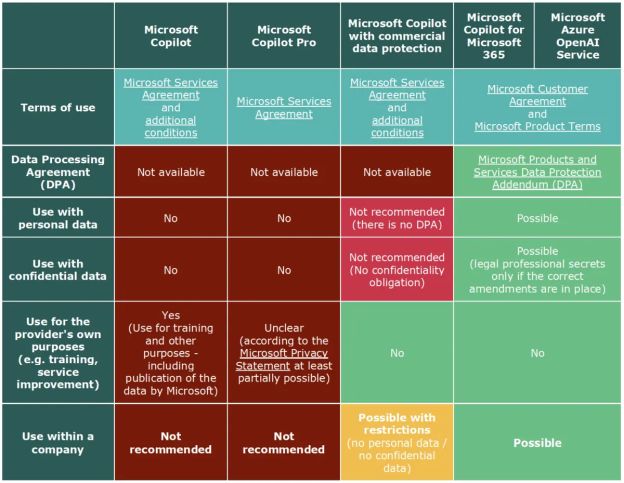

Microsoft Copilot and Azure OpenAI Service

A similar, but not identical, picture emerges at Microsoft: here too, different contractual terms apply to the various AI tools available. Anyone using "Copilot" (previously "Bing Chat"), which is freely available via the Edge browser, not only allows their input and output to be used to improve and train the service, but also grants Microsoft a license to use the input and output for its own purposes and, in particular, to publish it. This version is therefore not suitable for use in companies. In addition, since January 16, 2024, Microsoft has been offering "Copilot Pro" to private users with private M365 subscriptions. Copilot Pro is purchased under the Microsoft Services Agreement for private customers and is only available in private subscriptions. It is not suitable for business use because it lacks the necessary agreements.

For business use, Microsoft offers "Copilot with commercial data protection" (previously "Bing Chat Enterprise") in the Edge browser; it is included, for example, in the M365 products for companies. To use Copilot with commercial data protection, the user logs in with their business M365 account, and the following message is then displayed:

"Your personal and company data are protected in this chat."

This could lead to the conclusion that Copilot with commercial data protection is covered by business contracts such as the Microsoft Customer Agreement (MCA) and Microsoft's well-known Data Processing Addendum (DPA), and that there is therefore a reasonably solid contractual basis (as there is for the business use of M365, for example). However, this is not the case: when using Copilot with commercial data protection, individual users must accept additional terms of use, thereby agreeing to the Microsoft Services Agreement for private customers.

In these additional terms of use concluded by the user, Microsoft assures that, unlike with Copilot for private customers, the data will not be used for Microsoft's own purposes and will only be stored for as long as the browser window is open. This means that the data is not used to train the AI. Unlike the private version, no license is granted to Microsoft to use the input and output. However, the Copilot with commercial data protection is expressly excluded from the DPA required for companies when using processors, and Microsoft considers itself to be the controller of the data processing.

This raises a number of data protection issues, as Microsoft should generally act as a processor when its services are used in companies (as is the case with M365, for example). For companies, this means that they are generally not allowed to use personal data in Copilot with commercial data protection, because such use would require a DPA. However, personal data is not completely unprotected: the Irish Microsoft company, which is the contractual partner, is subject to the GDPR and thus to appropriate data protection. Nevertheless, it is considered a third party under data protection law, and companies in particular will not want to simply hand over their personal data to third parties.

In addition, neither the Microsoft Services Agreement nor the terms of use of Copilot with commercial data protection contain a confidentiality clause and therefore the confidentiality of the data is not contractually guaranteed. The main advantage of this version therefore lies solely in the fact that Microsoft does not use input or output for its own purposes in accordance with the terms of use and does not receive any rights to the input or output. However, as these terms of use must be regularly accepted by the individual user, they can also be changed just as easily by Microsoft.

Only the use of "Copilot for Microsoft 365" or "Azure OpenAI Service" offers contractual protection in terms of data protection and confidentiality for companies. These services are obtained under business contracts such as the aforementioned MCA and are subject to the aforementioned Microsoft DPA. In addition, these services offer the option of concluding additional agreements for companies with special requirements, such as professional secrecy (e.g. lawyers, doctors, banks).

Copilot for Microsoft 365 has been available to all Swiss businesses since January 16, 2024. However, Microsoft is not really transparent about the legal status and contractual terms of its AI tools (e.g. Copilot with commercial data protection) and keeps changing them and their product names. This makes it unnecessarily difficult for most business users to find their way around. Nevertheless, with Copilot for Microsoft 365 there is now a product that can be used in a company under existing commercial contracts (including the DPA), although it is quite expensive. It is important to make sure that users understand the difference between Copilot with commercial data protection and Copilot for Microsoft 365, as only the latter has the contractual provisions necessary for data-protection-compliant use in a company.


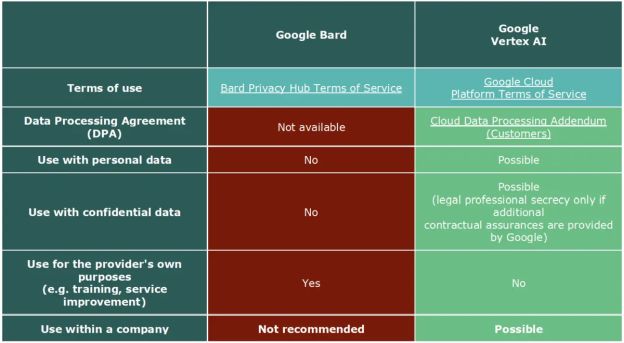

Google Bard and Vertex AI

Google offers "Bard", a free AI chatbot that is primarily designed for private users. This service is not suitable for the use of personal data and confidential information, as Google expressly points out. Bard can therefore only be used to a limited extent in everyday working life - namely without processing confidential data or personal data.

"Vertex AI", on the other hand, is an enterprise service from Google that provides different AI models. Vertex AI is used under the terms of use of the "Google Cloud Platform" and Google's data processing agreement (DPA). Service-specific conditions must also be observed. Vertex AI can also be used with personal data and confidential information. However, for companies subject to legal professional secrecy, additional contractual assurances from Google are required in order to meet the legal requirements.


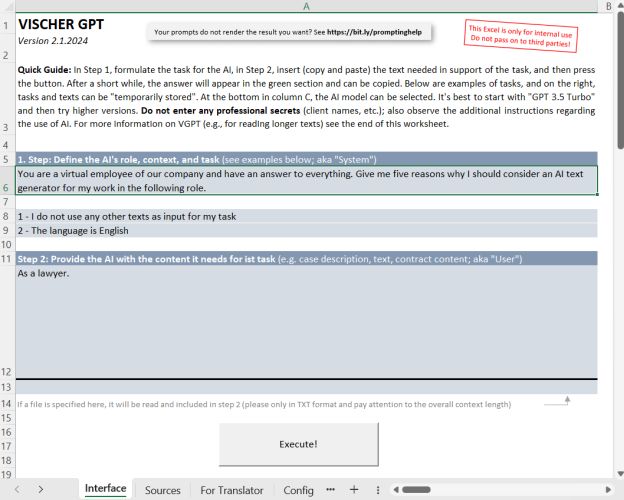

VISCHER GPT

We originally developed this tool only for our own internal use. It is an Excel-based tool with which OpenAI's various GPT models can be used to process texts that are longer than usual. VISCHER GPT, or VGPT as we call it, is an AI text generator, i.e. it is not possible to chat with the AI. Instead, it is given a task and content (e.g. a contract to be analyzed). Files in TXT format can also be imported. The tool then produces a response.

Due to popular demand, we are now making this tool available to everyone free of charge as open source. It is available for download in English and in German. For it to work, Excel files with macros (i.e. program code) must be permitted. The macros have been digitally signed by us, VISCHER AG, so that the internal IT department can whitelist them (the execution of macros is restricted in many companies for security reasons). VGPT only works on Windows computers due to some restrictions of Excel on macOS.

To be used, VGPT must be configured on the "Config" worksheet, i.e. provided with an API key from OpenAI (www.openai.com). This is the access code for the programming interface of OpenAI's GPT language models. To obtain such an API key, an OpenAI account is required, which can be created free of charge. If you want to use the newer models, in particular "GPT-4 Turbo", which can also process long texts (and which VGPT supports), you have to pay for your account, but the price is not that high and the API key can be shared by several people at your company. This way, the company pays much less than it would if it had to buy a "ChatGPT Team" or "ChatGPT Enterprise" account for every user (and users still get the latest GPT models, possibly even previews that are not available otherwise). Another advantage is that access is very easy: VGPT can simply be saved on the desktop, even in several copies with different content if required.
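To illustrate what "using the GPT models via the API" involves technically, here is a minimal Python sketch (not VGPT's actual code, which consists of Excel macros) that builds a request to OpenAI's Chat Completions endpoint from a task and content, authenticated with the API key. Assumptions: the key is kept in an environment variable named OPENAI_API_KEY rather than in the file itself, and the model name "gpt-4-turbo" is used; check which model names your account can actually access. Only the Python standard library is used.

```python
import json
import os
import urllib.request

# OpenAI's Chat Completions endpoint.
API_URL = "https://api.openai.com/v1/chat/completions"


def load_api_key(var: str = "OPENAI_API_KEY") -> str:
    """Read the organization's API key from an environment variable (assumed name)."""
    key = os.environ.get(var)
    if not key:
        raise RuntimeError(f"Set {var} to the organization's API key")
    return key


def build_request(task: str, content: str, api_key: str,
                  model: str = "gpt-4-turbo") -> urllib.request.Request:
    """Build (but do not send) a request that applies a task to some content."""
    payload = {
        "model": model,  # assumed model name; adjust to what the account offers
        "messages": [
            # The task, e.g. "Summarize the key risks in this contract."
            {"role": "system", "content": task},
            # The content, e.g. text imported from a TXT file
            {"role": "user", "content": content},
        ],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # The API key authenticates every call against the shared account
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the text of the first generated answer."""
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

A call would look like `send(build_request("Summarize this contract.", text, load_api_key()))`. Keeping the key outside the tool itself is one way to reduce the problem, discussed below, of a shared tool containing the organization's confidential API key.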

From a data protection perspective, the major advantages of using GPT via the API are, first, that a data processing agreement can be concluded, as mentioned above, and, second, that OpenAI promises its users not to use the input and output for training its models. Although both are now also possible with "ChatGPT Team" or "ChatGPT Enterprise", as explained above, taking the API route means that a separate Team or Enterprise account is not required for every employee.

Furthermore, the contractual partner is no longer OpenAI, LLC in the USA, but its Irish subsidiary; the DPA is now automatically concluded with the party with which the service agreement also exists (see more on this above). However, data processing will continue to take place in the USA.

This means that using VGPT (or another such tool) to access the GPT models via the API is better in terms of data protection; in principle, the same applies to other services offered here in Europe that provide for a DPA and can ensure that data is not used for training purposes.

However, professional secrets should not yet be entrusted to OpenAI in this setup, as the necessary technical and contractual safeguards are not yet in place. Another restriction when using VGPT is that it is not possible to monitor the use of VGPT unless special precautions are taken because no personal account is used. In addition, employees are not allowed to pass on VGPT to others, as it contains the organization's confidential API key.

For us at VISCHER, VGPT is only an interim solution until we have implemented, later this year, an AI solution that is operated entirely in our own private cloud environment and can therefore also be used in compliance with our professional secrecy obligations. We will also soon try to negotiate a standardized amendment with a major provider to make its AI services suitable for professional secrets.

How to implement AI tools at work

What should you do to implement AI tools in your own company? We recommend the following three steps:

- Step 1 - Select tools: Check, with external support if necessary, which AI tools should be made available to employees or permitted for their work. We recommend using Microsoft "Copilot with commercial data protection" for requests that involve no personal data (because it is free), and tools and services (like VGPT) that are based on the OpenAI API or Google's Vertex AI (because of the DPA and the commitment not to use input and output for training purposes). "Private" solutions are of course another option where a company has the means and experience to implement them (most smaller companies, however, will not). Microsoft's "Copilot for Microsoft 365" is very interesting, but probably too expensive for most companies.

- Step 2 - Prepare for use: Once the tools have been selected, the necessary contracts must be concluded and the tools configured for use.

- Step 3 - Instruct employees: To ensure legally compliant use, employees must be instructed on how they may use these AI tools. This includes instructions on which categories of data and third-party content (e.g., copyright-protected works) are permitted for which tool, as well as general guidelines on how to handle AI chatbots and text generators. At VISCHER, for example, we have conducted a short training course on this for everyone (which, due to demand, we now also offer to other law firms and legal departments). See also our first blog post in this series, which contains a free training video on this topic. In the next part of this series, we will also publish a one-page directive that can be used to regulate the use of AI tools.
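The tool assessment above, combined with the instruction on which data categories are permitted for which tool, can be expressed as a simple lookup that an internal directive or helper could build on. The following Python sketch is hypothetical: the tool names, the data categories and the allowed combinations are a simplified reading of the analysis in this article, not a legal determination, and they must be re-checked whenever providers change their terms. Unknown tools are denied by default, and no tool is cleared for professional secrets, since each would first require additional contractual assurances.

```python
from enum import Enum


class DataCategory(Enum):
    """Hypothetical data categories for an internal AI policy."""
    NON_PERSONAL = "no personal or confidential data"
    PERSONAL = "personal data"
    CONFIDENTIAL = "business secrets"
    PROFESSIONAL_SECRET = "official or professional secrets"


# Business offerings with a DPA and no training use; whether to entrust
# business secrets to the provider remains a decision for the company.
_BUSINESS = frozenset({DataCategory.NON_PERSONAL, DataCategory.PERSONAL,
                       DataCategory.CONFIDENTIAL})
_NON_PERSONAL_ONLY = frozenset({DataCategory.NON_PERSONAL})
_NOTHING = frozenset()

# Simplified, hypothetical reading of the assessment in this article.
POLICY: dict[str, frozenset] = {
    "ChatGPT Free": _NON_PERSONAL_ONLY,
    "ChatGPT Plus": _NON_PERSONAL_ONLY,
    "ChatGPT Team": _BUSINESS,
    "ChatGPT Enterprise": _BUSINESS,
    "OpenAI API (e.g. VGPT)": _BUSINESS,
    "Copilot (consumer)": _NOTHING,  # Microsoft may use and publish input/output
    "Copilot Pro": _NOTHING,         # private subscriptions only
    "Copilot with commercial data protection": _NON_PERSONAL_ONLY,
    "Copilot for Microsoft 365": _BUSINESS,
    "Azure OpenAI Service": _BUSINESS,
    "Google Bard": _NON_PERSONAL_ONLY,
    "Vertex AI": _BUSINESS,
}


def is_permitted(tool: str, category: DataCategory) -> bool:
    """Return True if the policy allows this data category in this tool.

    Tools that are not listed are denied by default.
    """
    return category in POLICY.get(tool, frozenset())
```

For example, `is_permitted("Copilot with commercial data protection", DataCategory.PERSONAL)` returns `False`, matching the conclusion that personal data should not be entered there, while the same check for "ChatGPT Team" returns `True` because a DPA can be concluded.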

Keep in mind: AI providers change and modify their offers and contracts regularly and at very short intervals. It is therefore essential to check the contracts in detail when concluding them and again whenever they change.

The content of this article is intended to provide a general guide to the subject matter. Specialist advice should be sought about your specific circumstances.